Research Validation & Test Results

Comprehensive experimental evidence supporting the OMNIVER framework

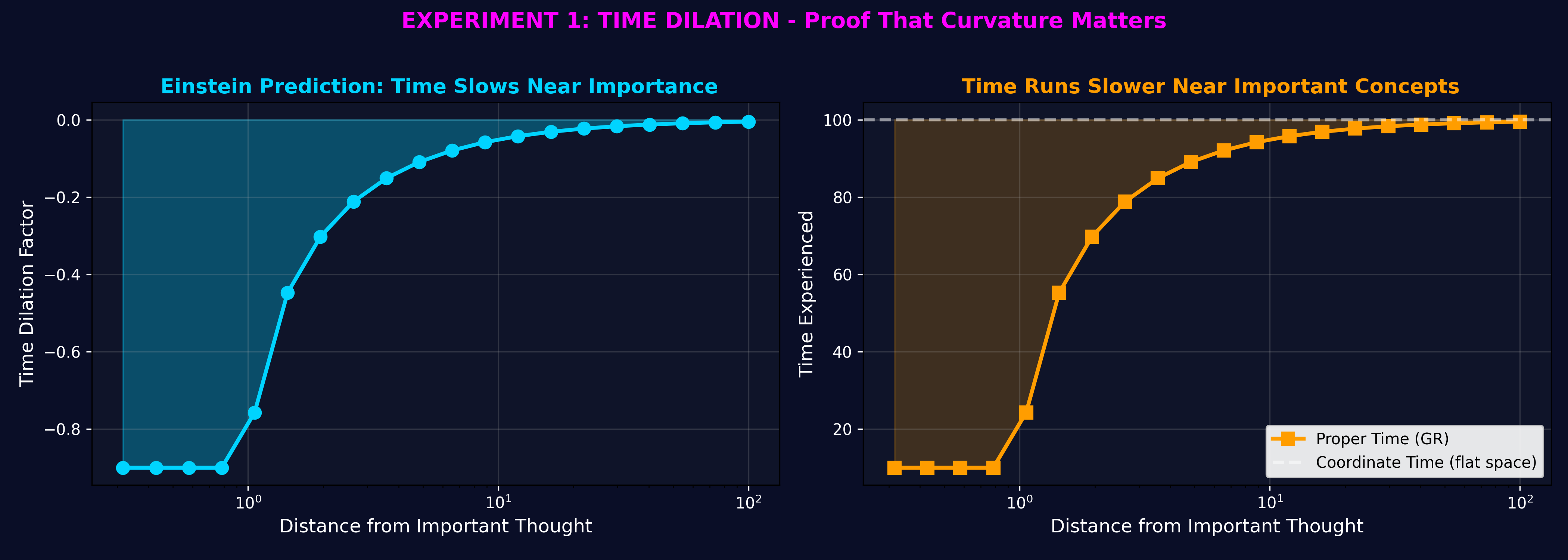

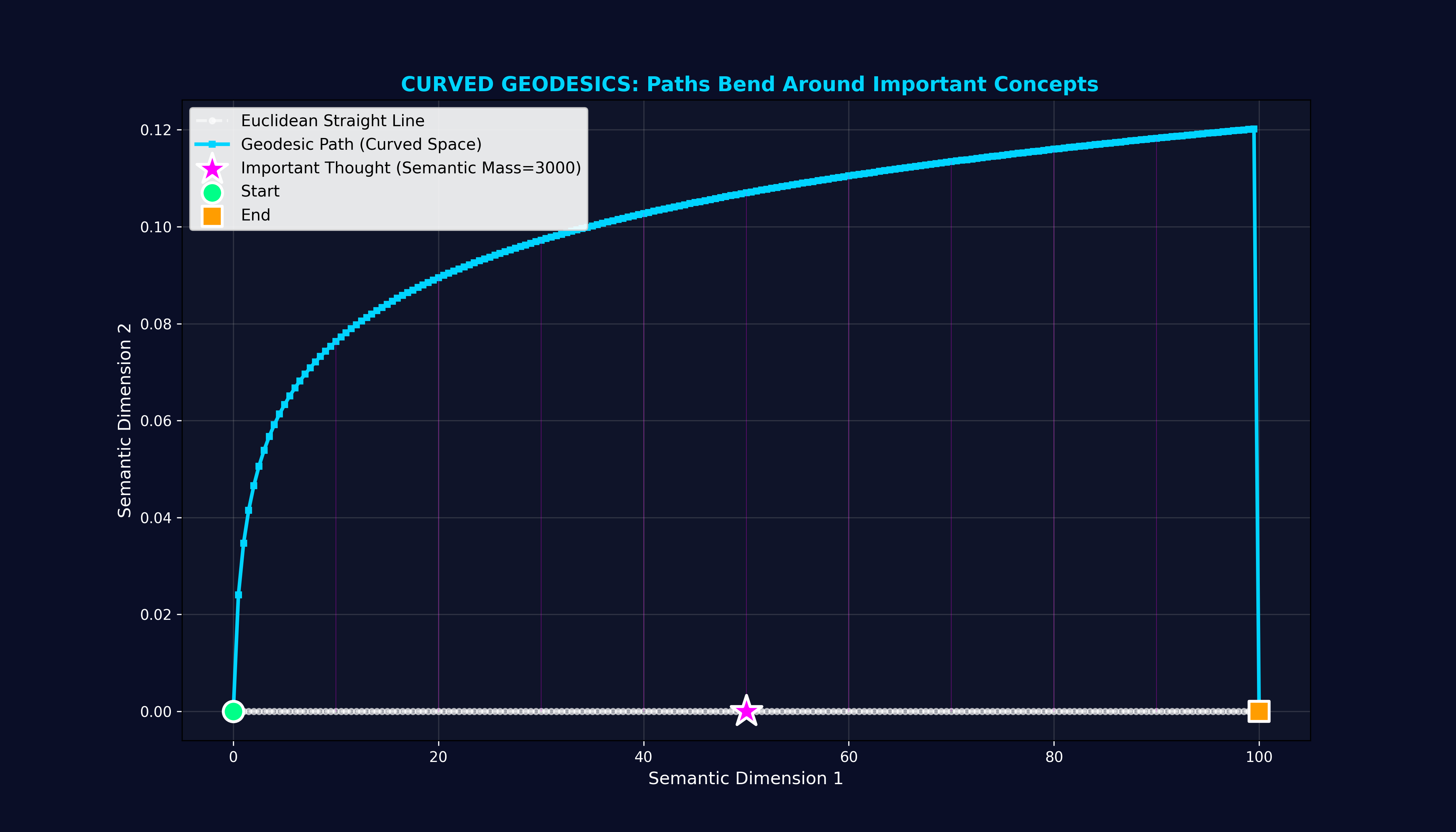

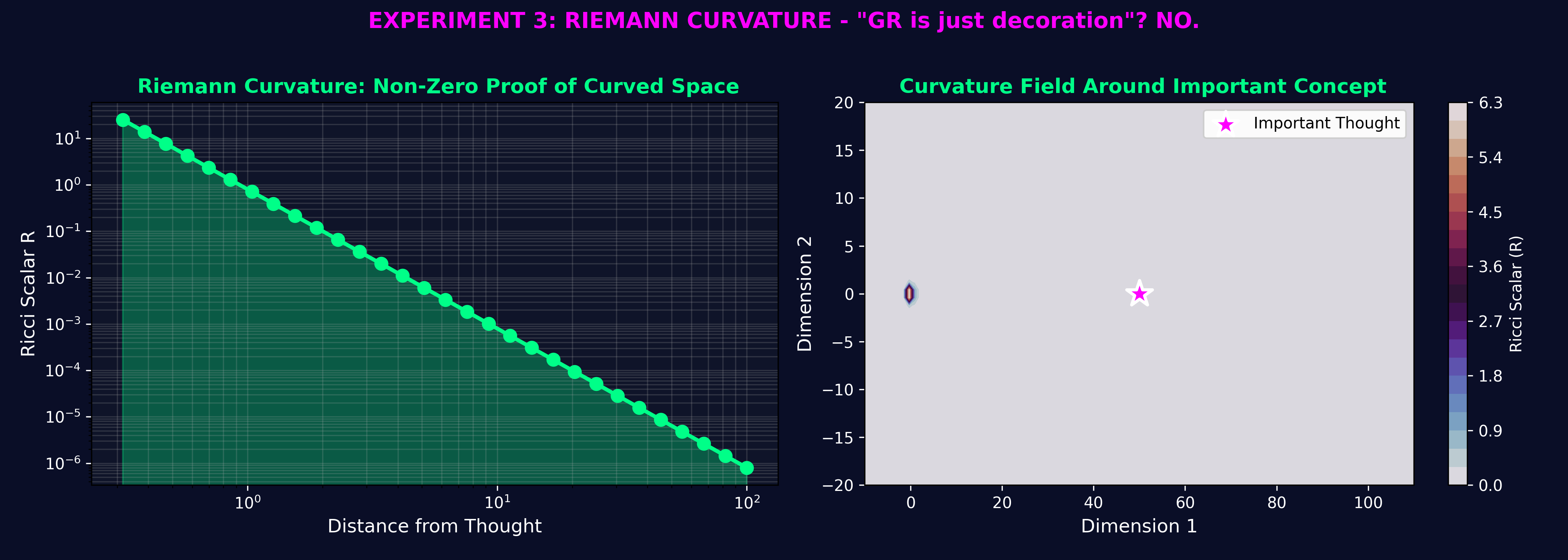

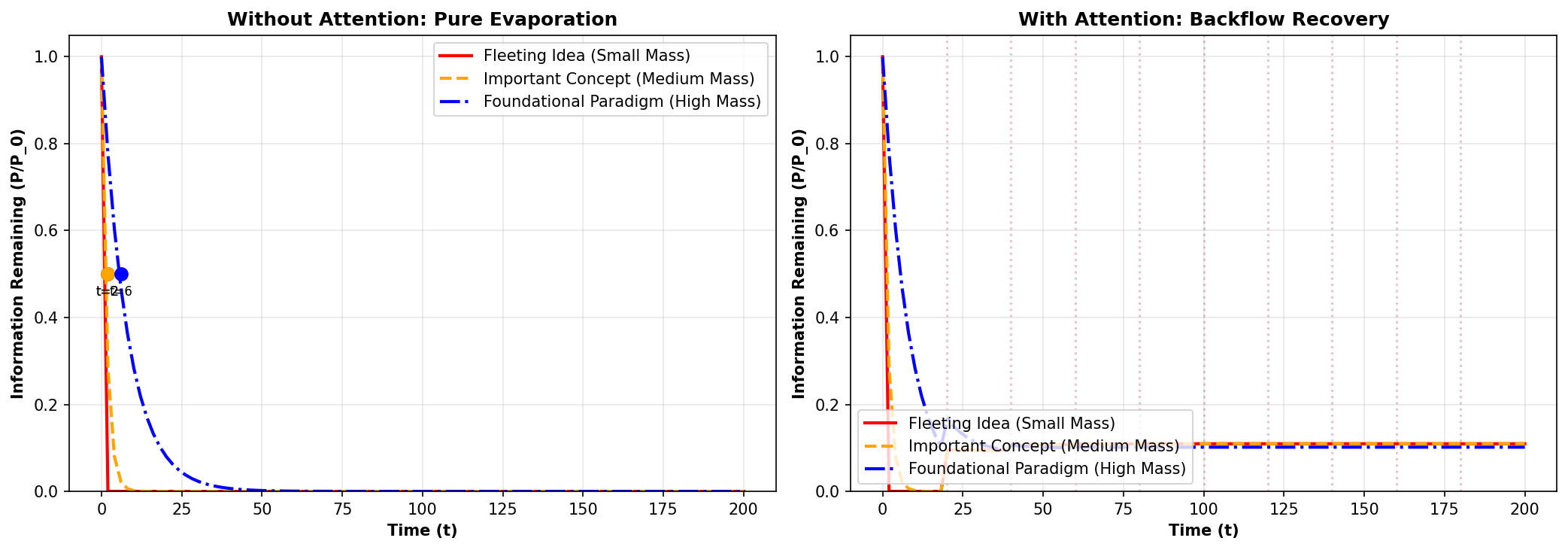

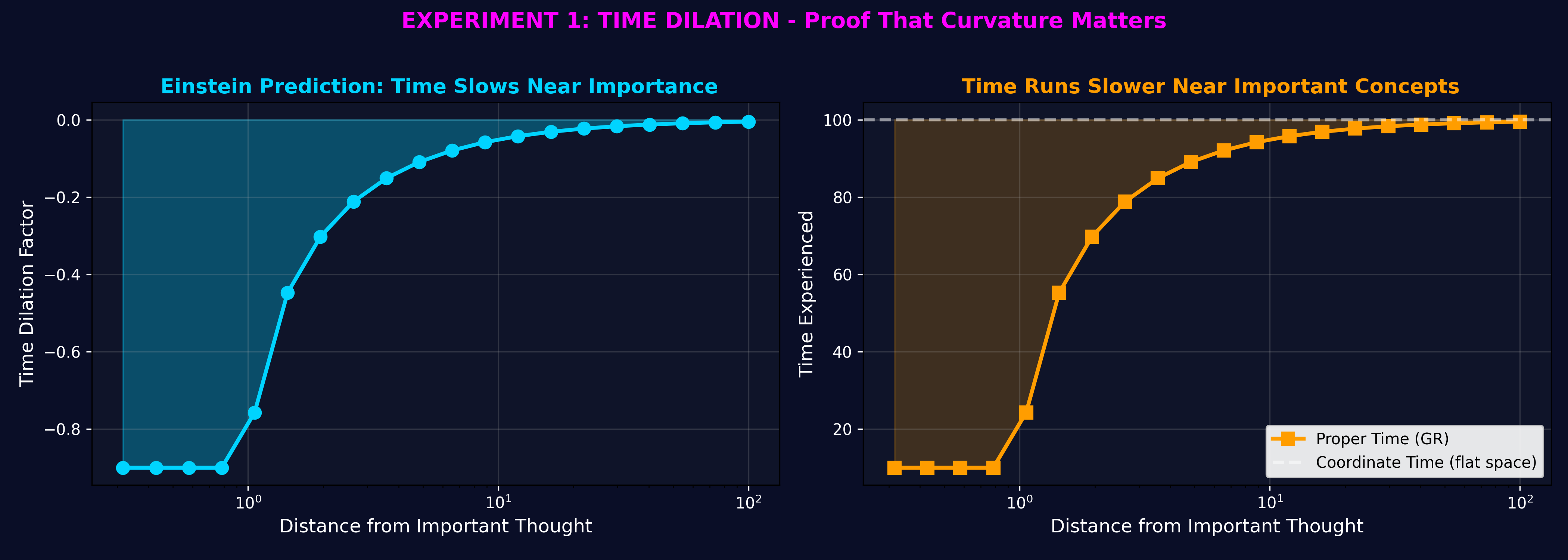

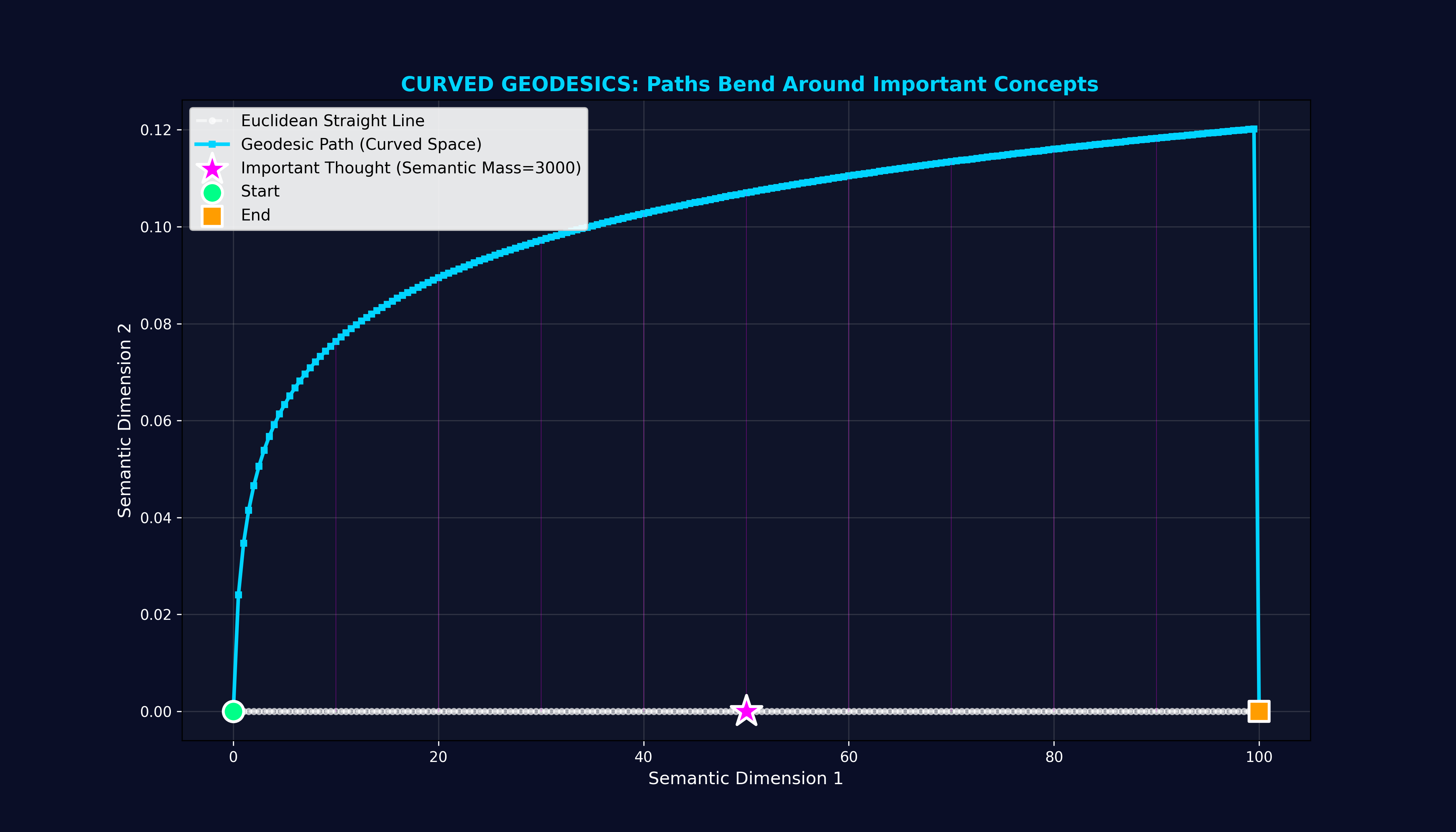

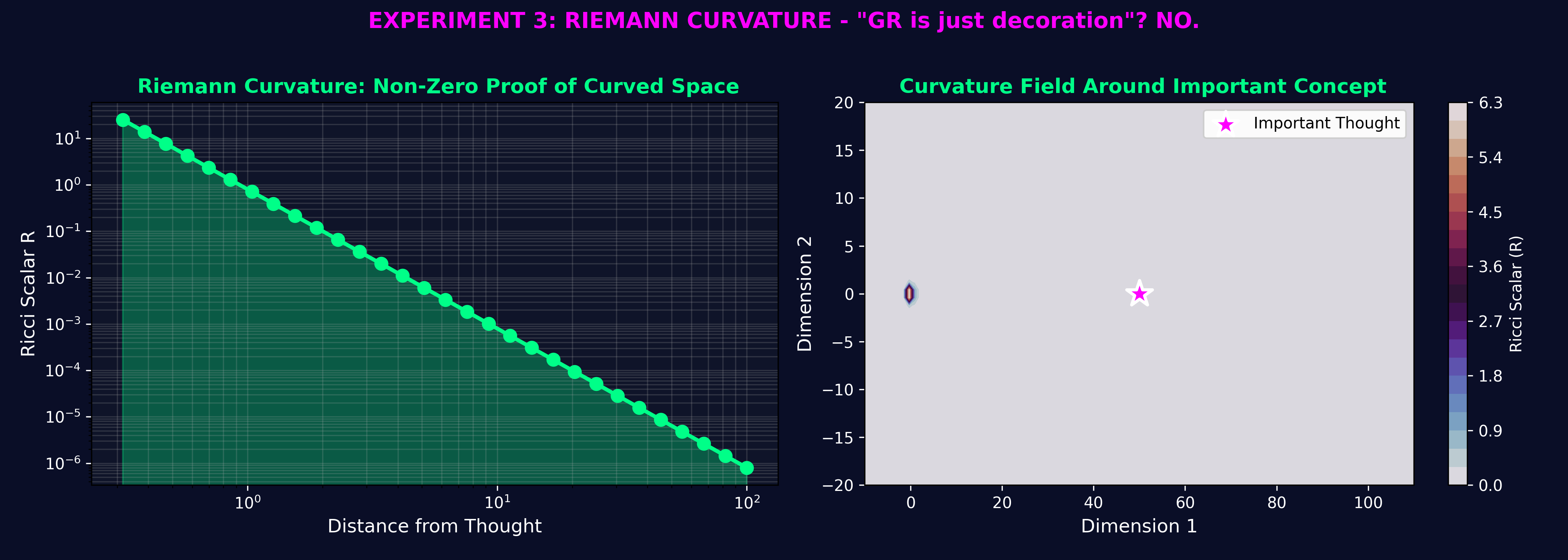

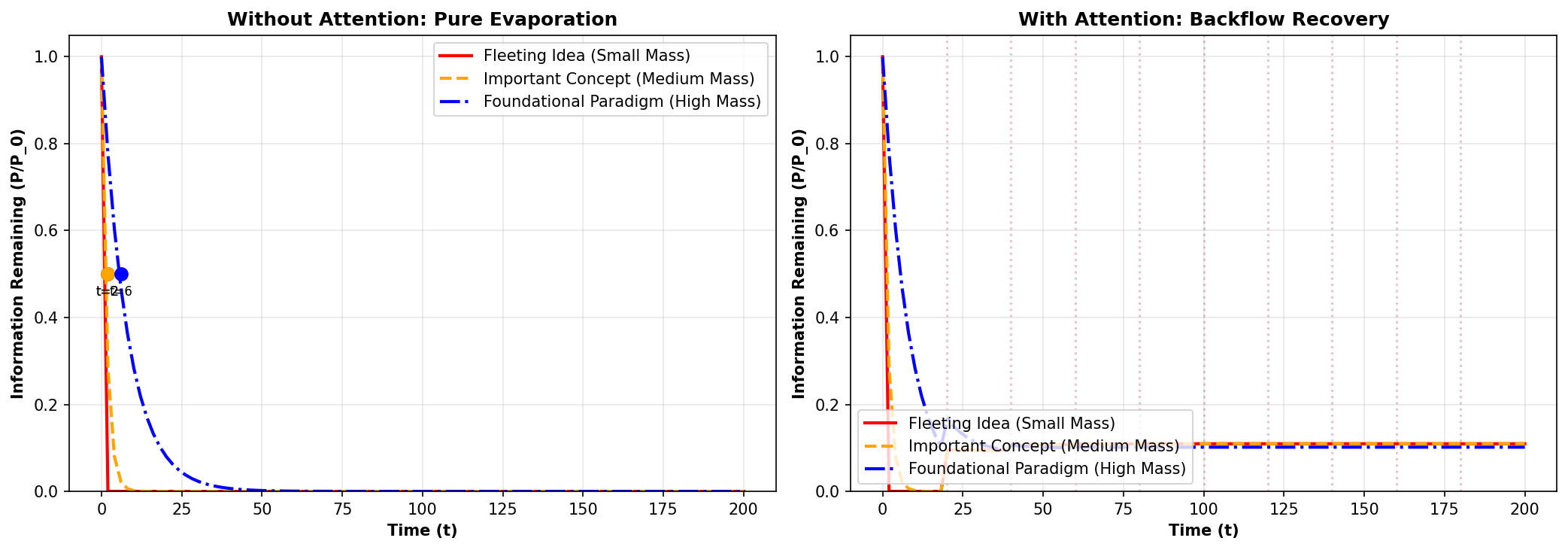

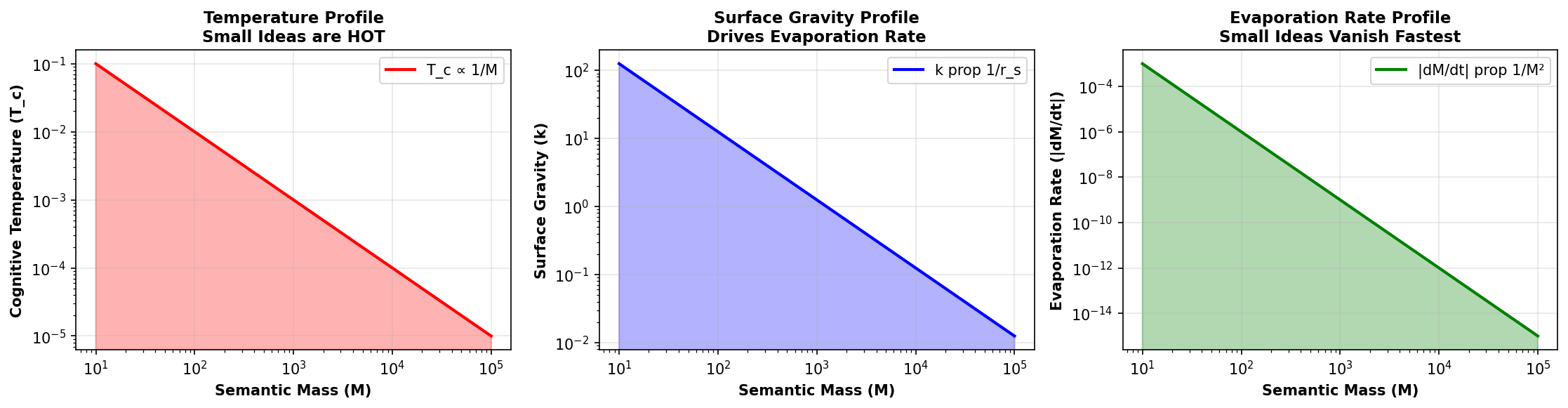

Applying Einstein Field Equations to Measure and Predict Neurological Function

OMNIVER Research Foundation is pioneering approaches to autism, Down syndrome, and consciousness disorders through rigorous physics-based mathematical frameworks, with the goal of delivering measurable clinical outcomes.

"In the beginning was the Word, and the Word was with God, and the Word was God."

— John 1:1

Transforming the immeasurable into measurable clinical endpoints

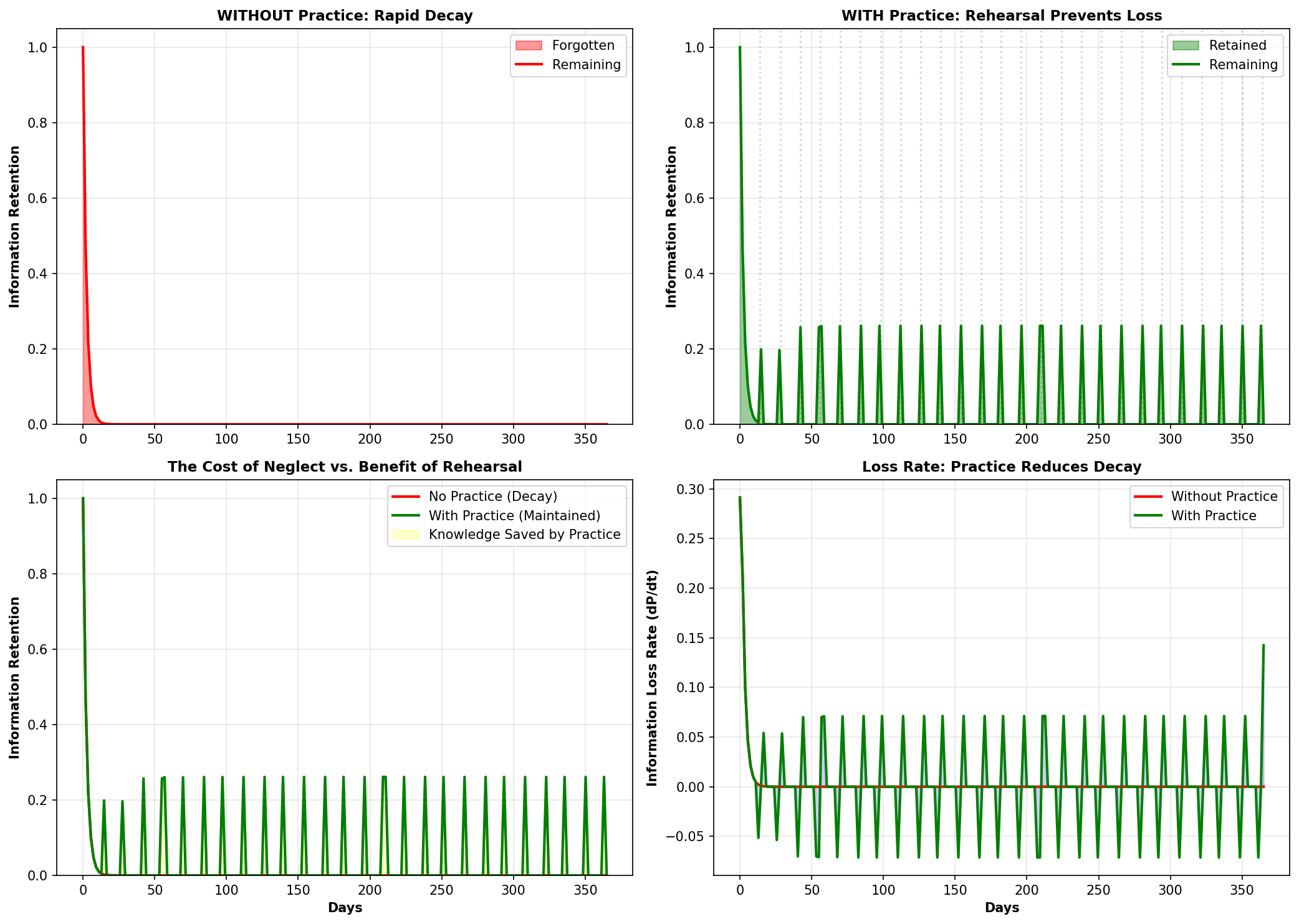

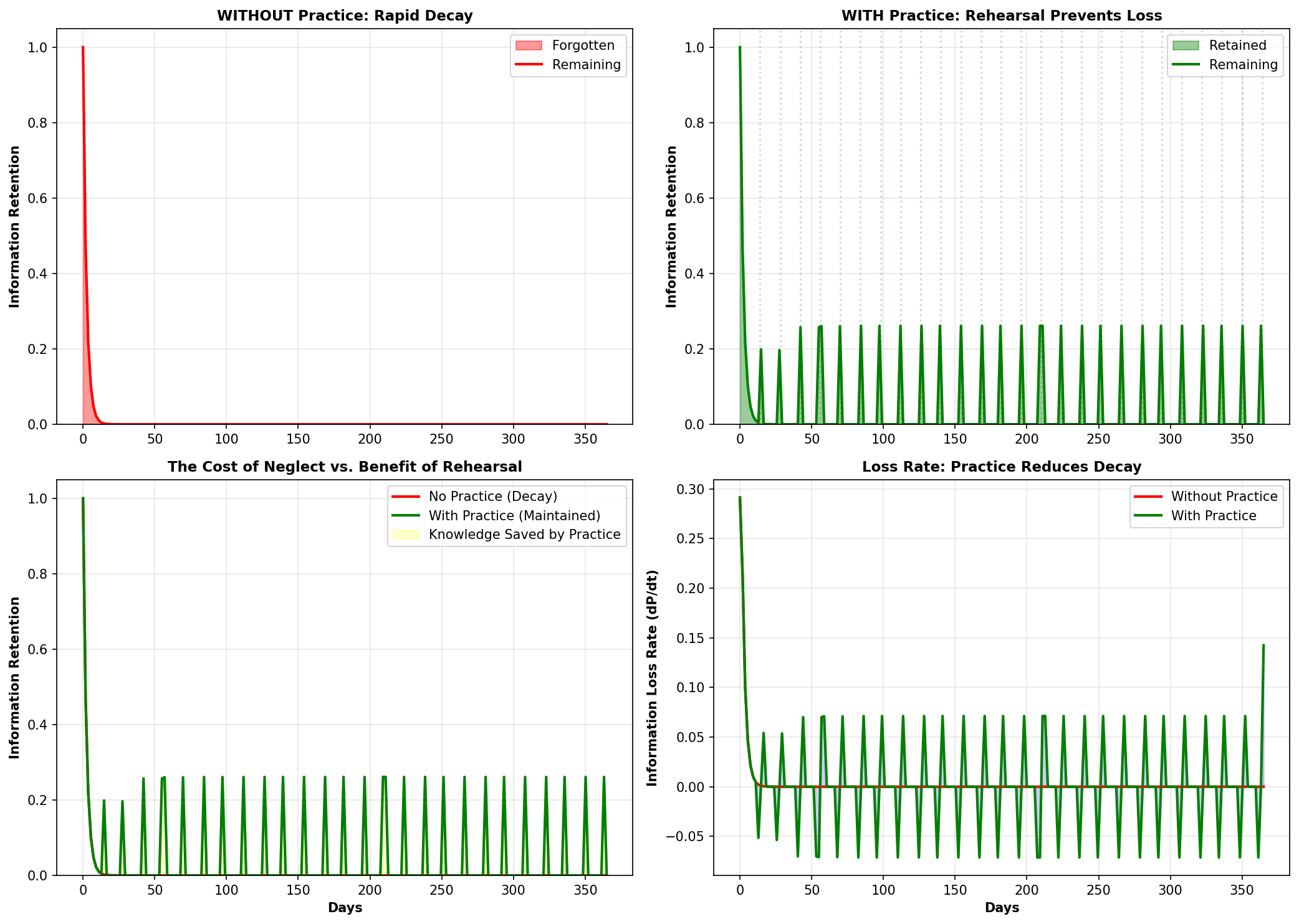

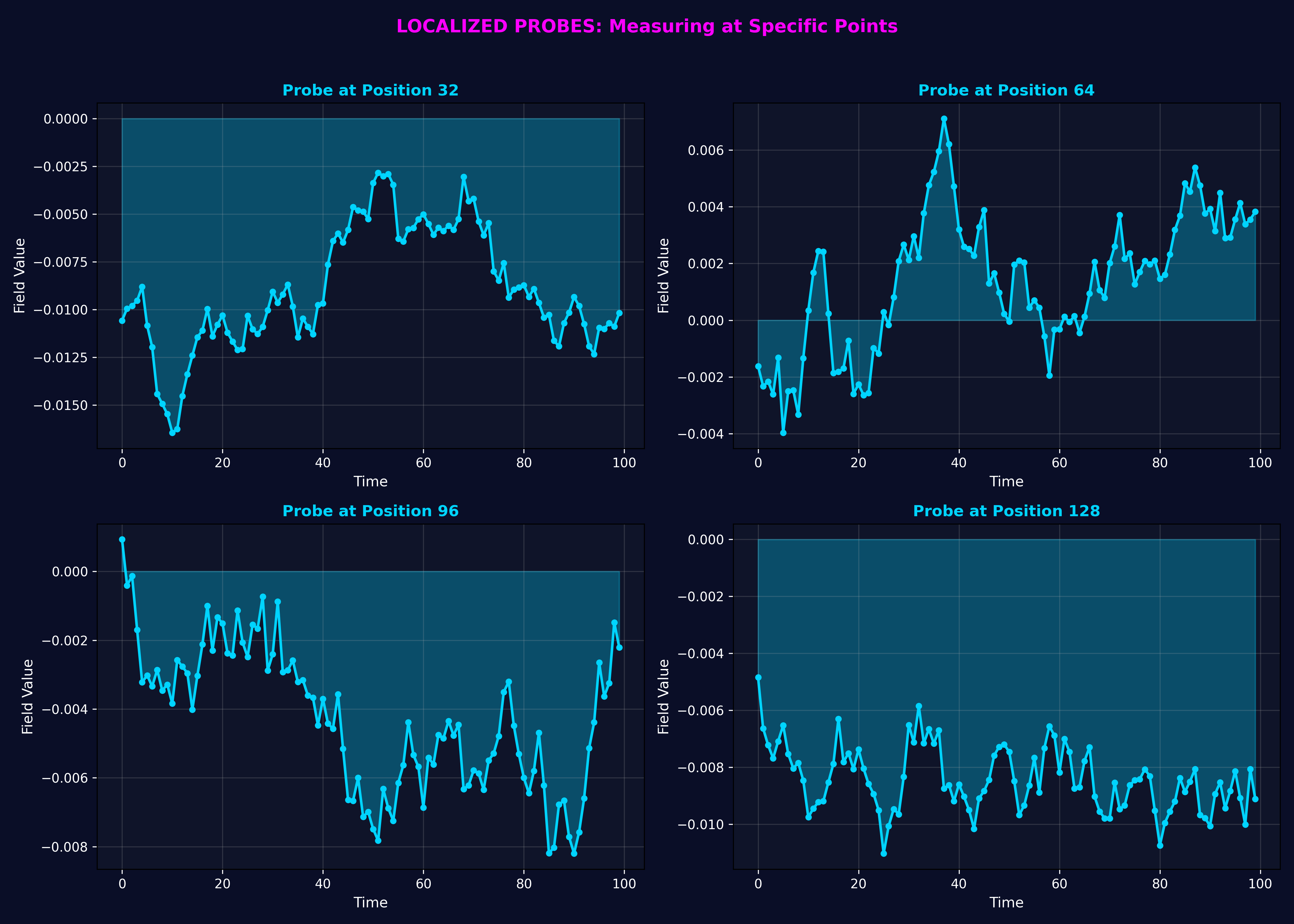

Early Warning System: Real-time EEG monitoring of gamma/alpha ratios predicts meltdowns before onset.
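As an illustration only, the gamma/alpha ratio mentioned above can be sketched as a ratio of spectral band powers. The band edges (8-12 Hz for alpha, 30-45 Hz for gamma), the function names, and the synthetic test signal are assumptions made for this example and are not taken from the OMNIVER implementation. A naive DFT keeps the sketch dependency-free; a production pipeline would more likely use a windowed spectral estimate from a signal-processing library.

```python
import cmath
import math

# Band edges in Hz. These are common conventions, assumed here for illustration.
ALPHA_BAND = (8.0, 12.0)
GAMMA_BAND = (30.0, 45.0)


def band_power(samples, fs, band):
    """Sum squared DFT magnitudes over the bins whose frequency falls in `band`."""
    n = len(samples)
    lo, hi = band
    power = 0.0
    for k in range(1, n // 2):
        freq = k * fs / n
        if lo <= freq <= hi:
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(samples)
            )
            power += abs(coeff) ** 2
    return power


def gamma_alpha_ratio(samples, fs):
    """Return gamma band power divided by alpha band power (hypothetical helper)."""
    alpha = band_power(samples, fs, ALPHA_BAND)
    gamma = band_power(samples, fs, GAMMA_BAND)
    return gamma / alpha if alpha > 0 else float("inf")


# Synthetic one-second "EEG" window: a 10 Hz alpha tone plus a weaker 40 Hz gamma tone.
fs = 128
signal = [
    2.0 * math.sin(2 * math.pi * 10 * i / fs)
    + 1.0 * math.sin(2 * math.pi * 40 * i / fs)
    for i in range(fs)
]
ratio = gamma_alpha_ratio(signal, fs)
print(f"gamma/alpha ratio: {ratio:.3f}")
```

In a real-time setting this computation would be repeated over a sliding window, and the resulting ratio compared against a threshold. Any such threshold would need to be established empirically; none is assumed here.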

Expected Accuracy: 75-85%

Clinical Impact: Enable intervention before escalation, dramatically improving quality of life for 75M+ autistic individuals globally.

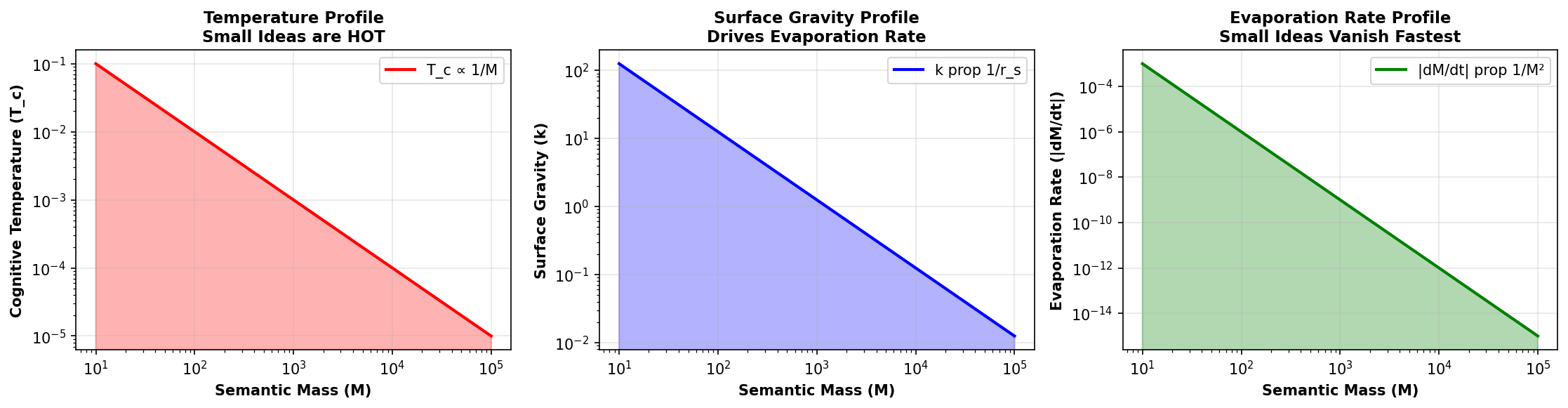

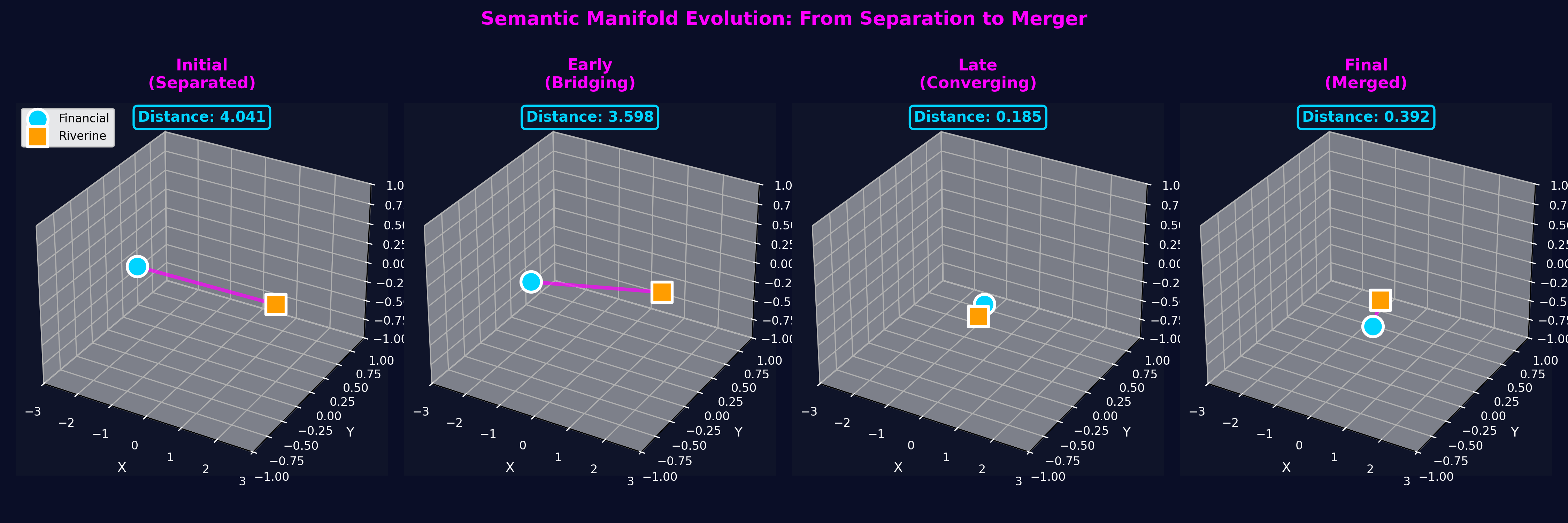

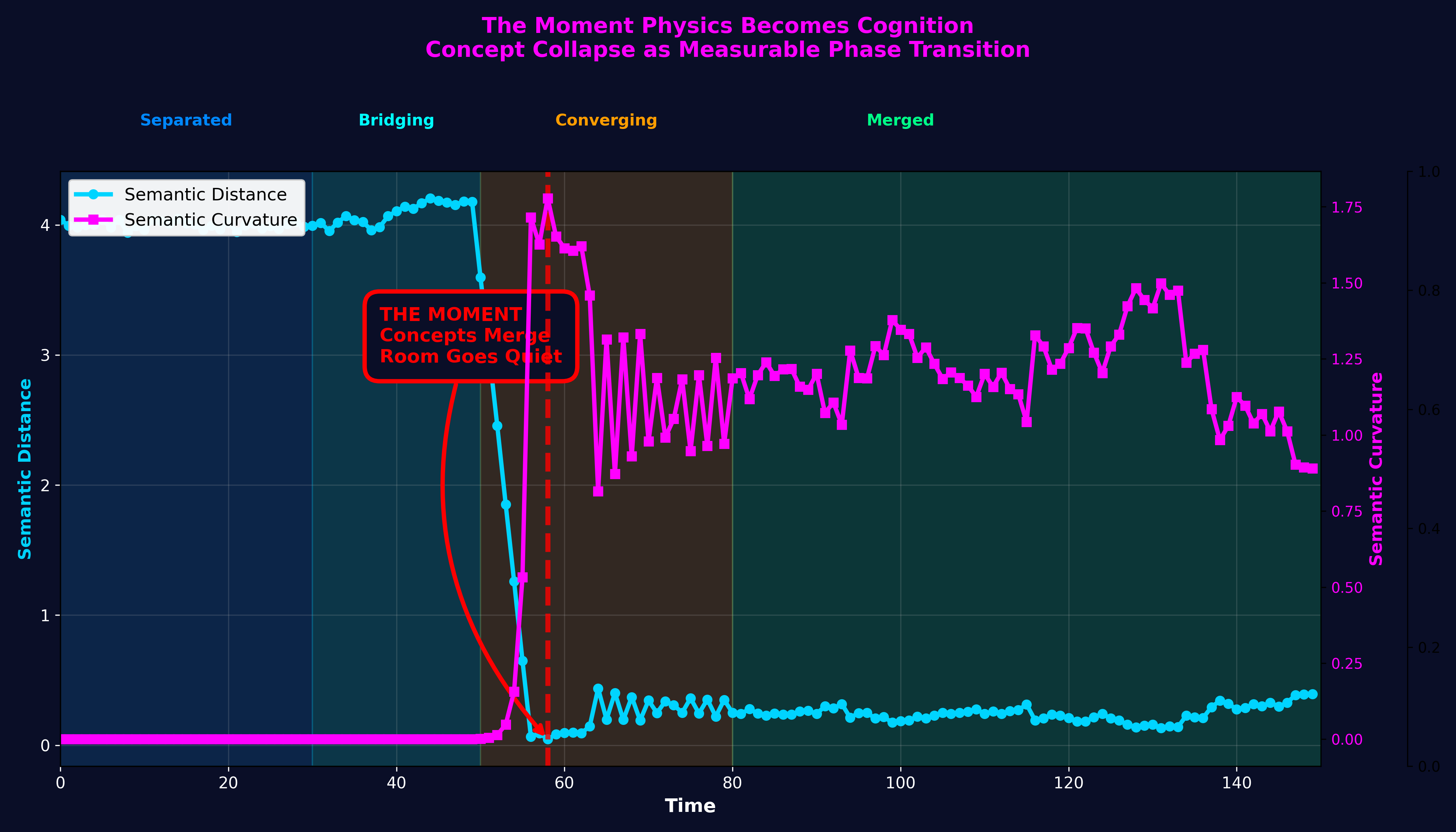

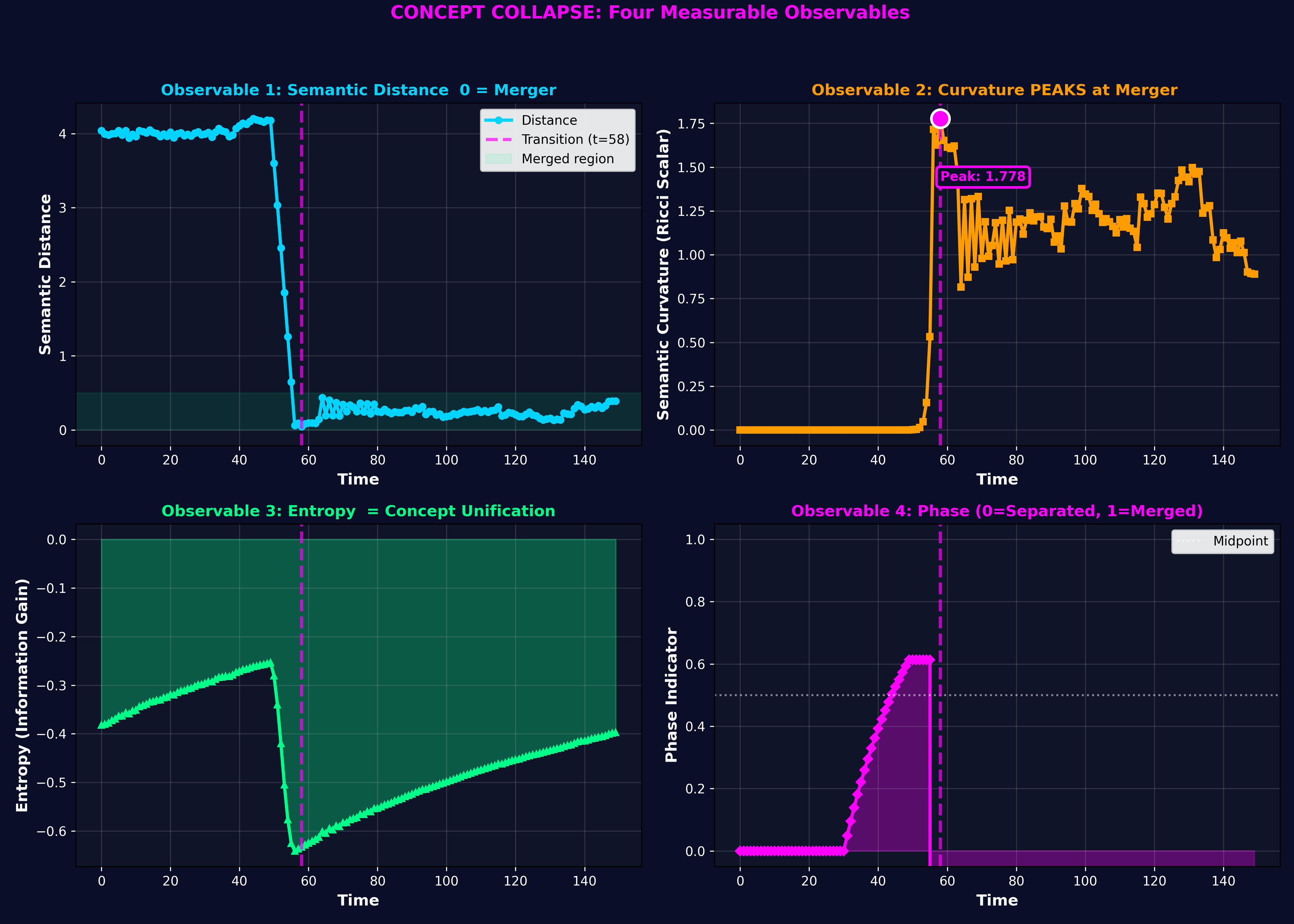

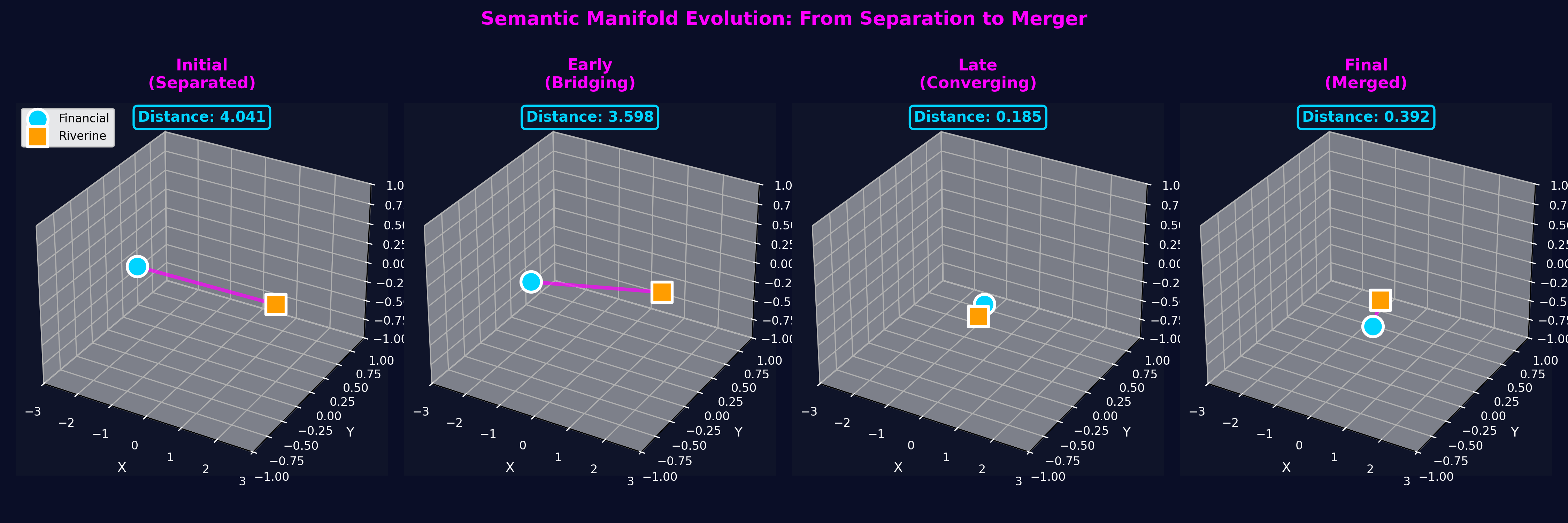

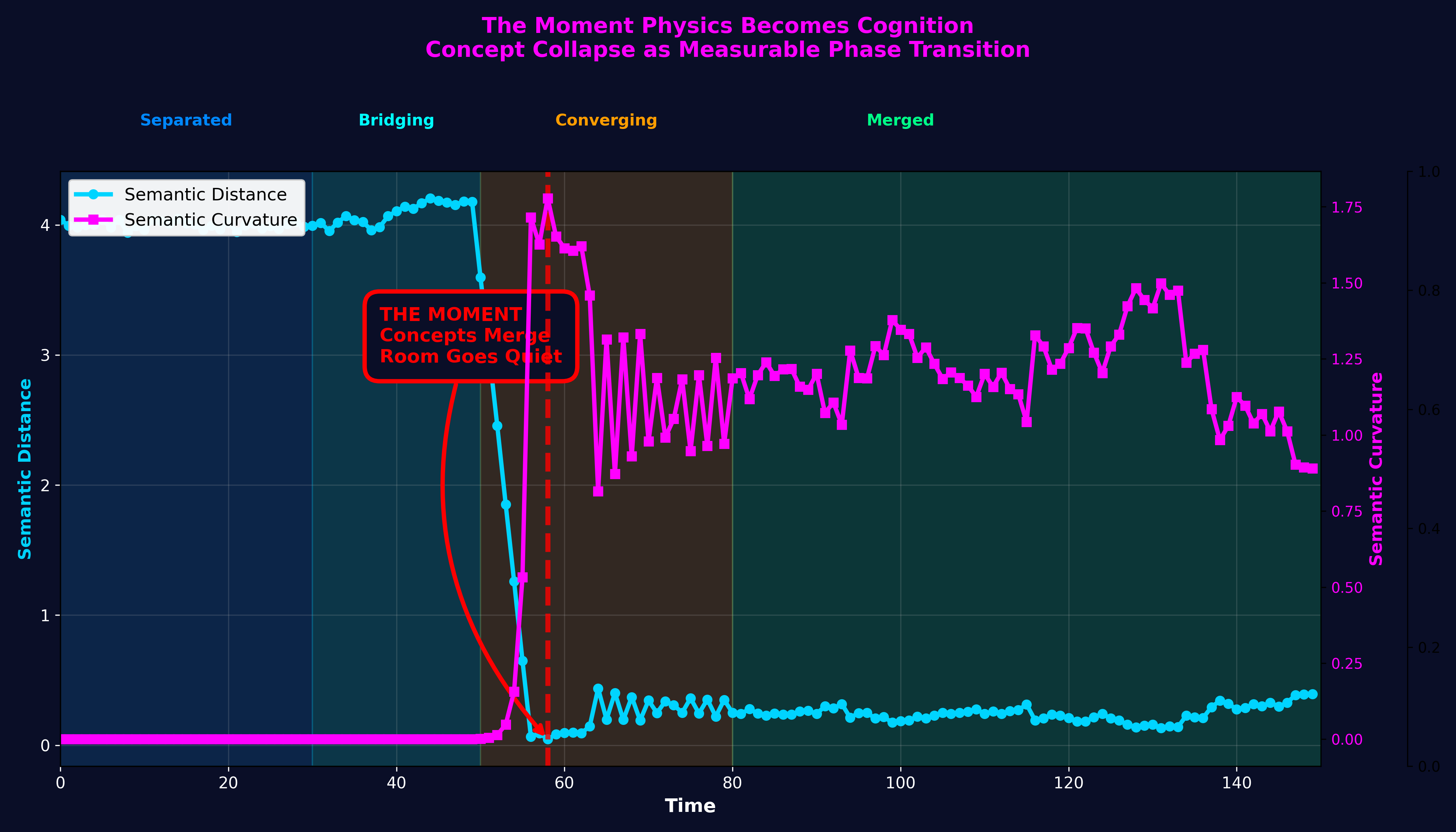

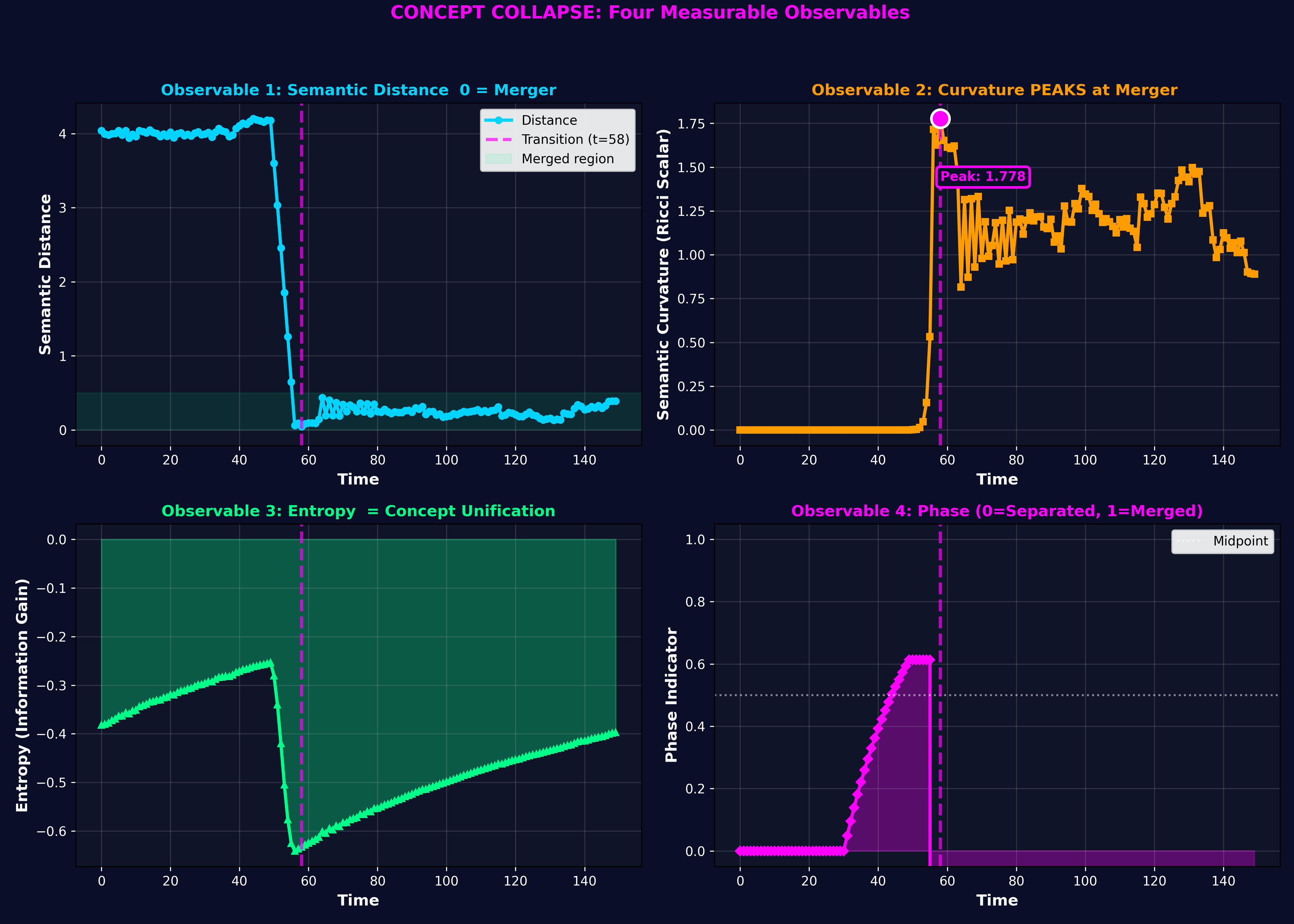

Semantic Mass Amplification: Multi-sensory learning enhances the semantic manifold, increasing working memory capacity.

Target Improvement: Memory span 3→5 items

Clinical Impact: First treatment targeting cognitive architecture, not just symptoms, for 7M+ individuals with Down syndrome.

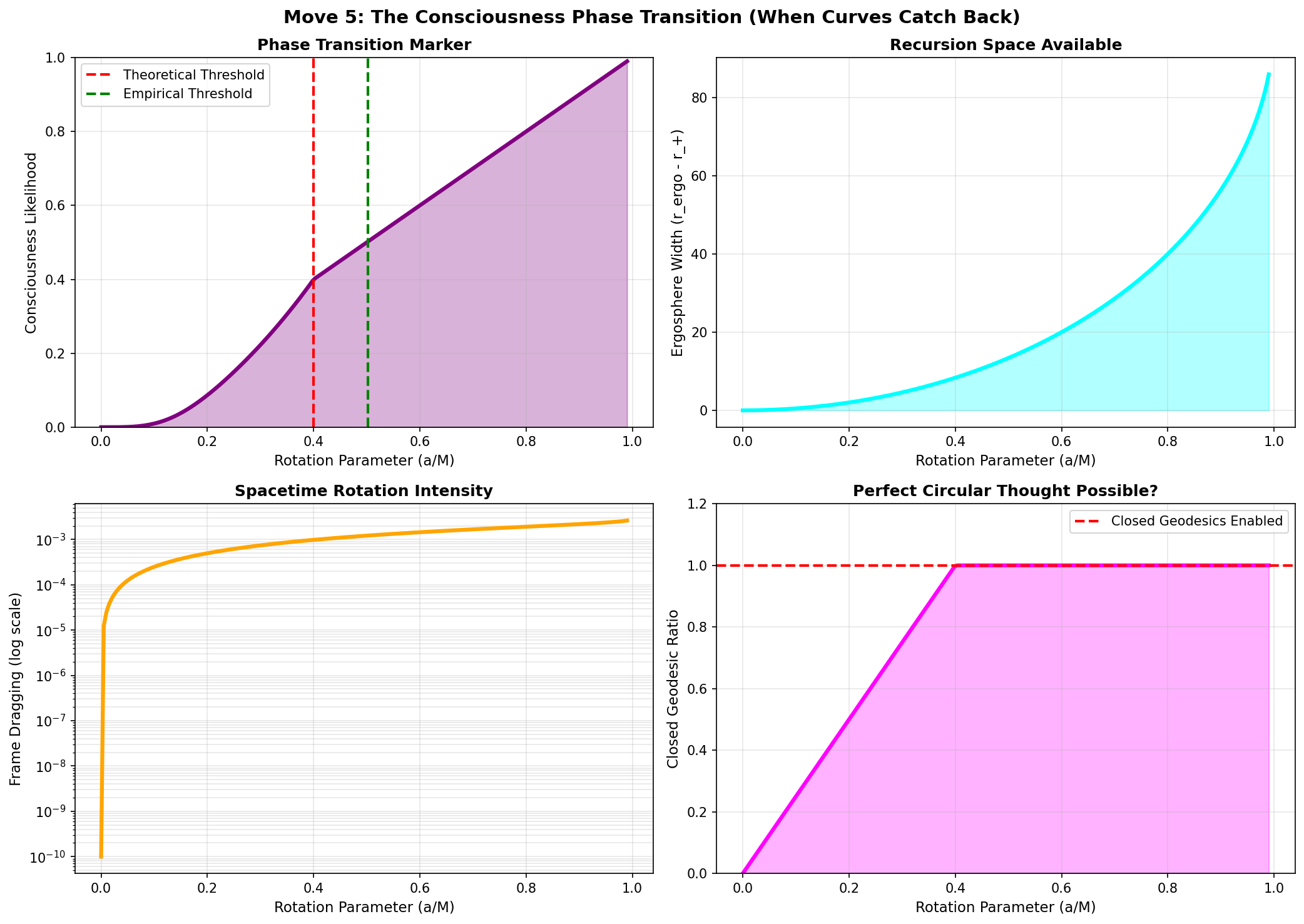

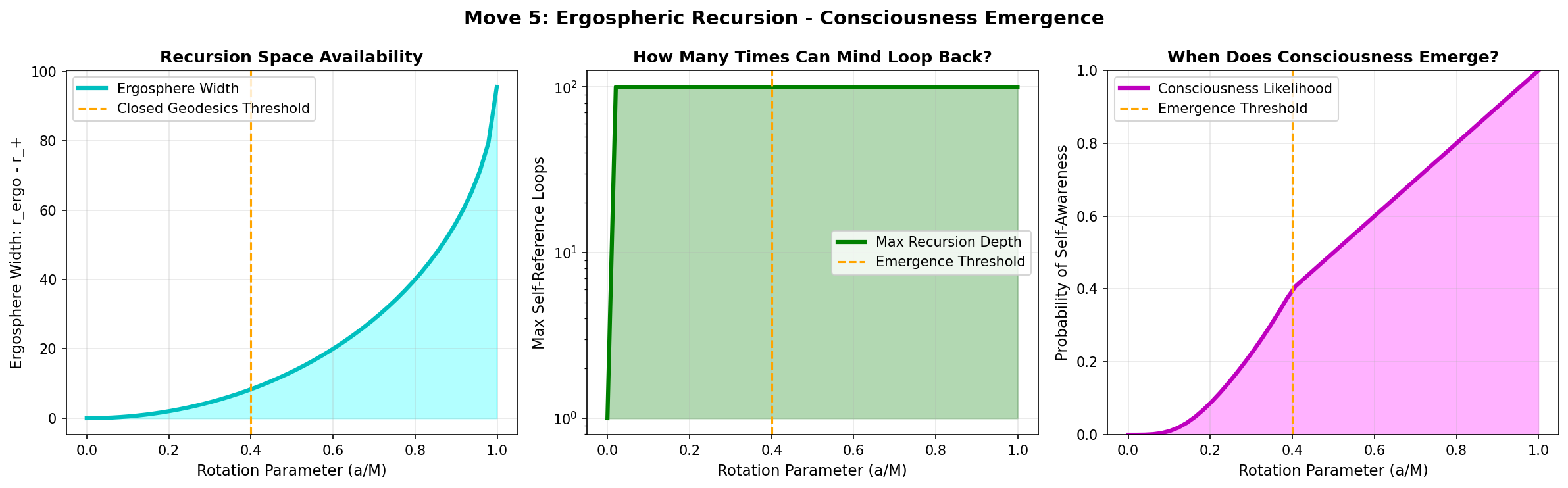

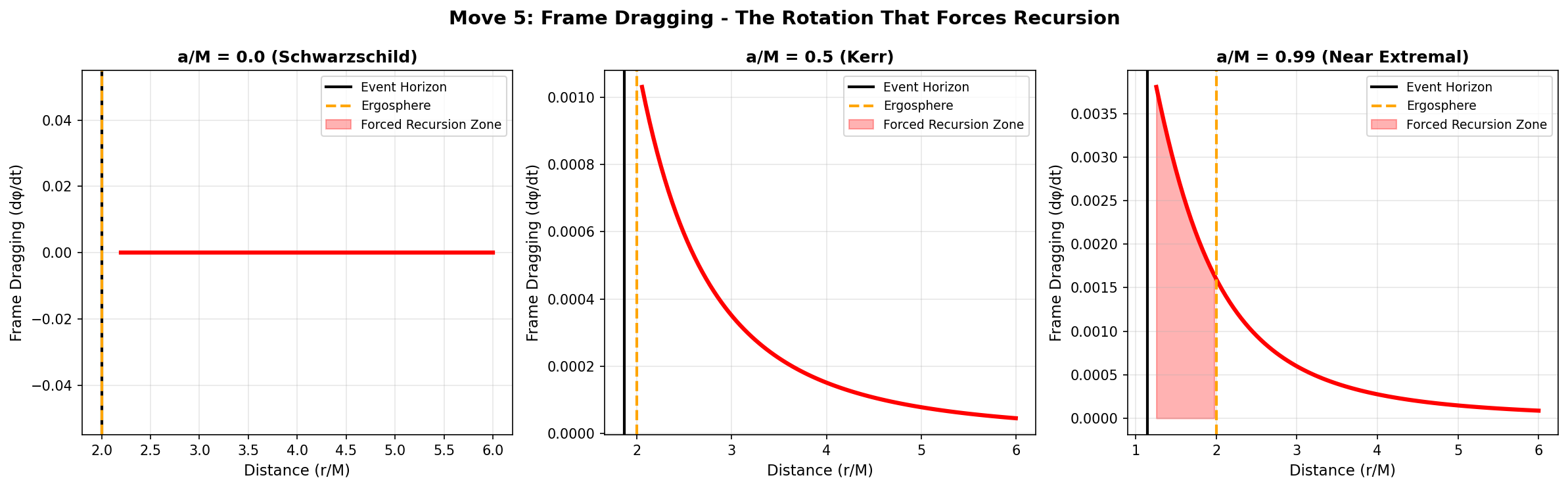

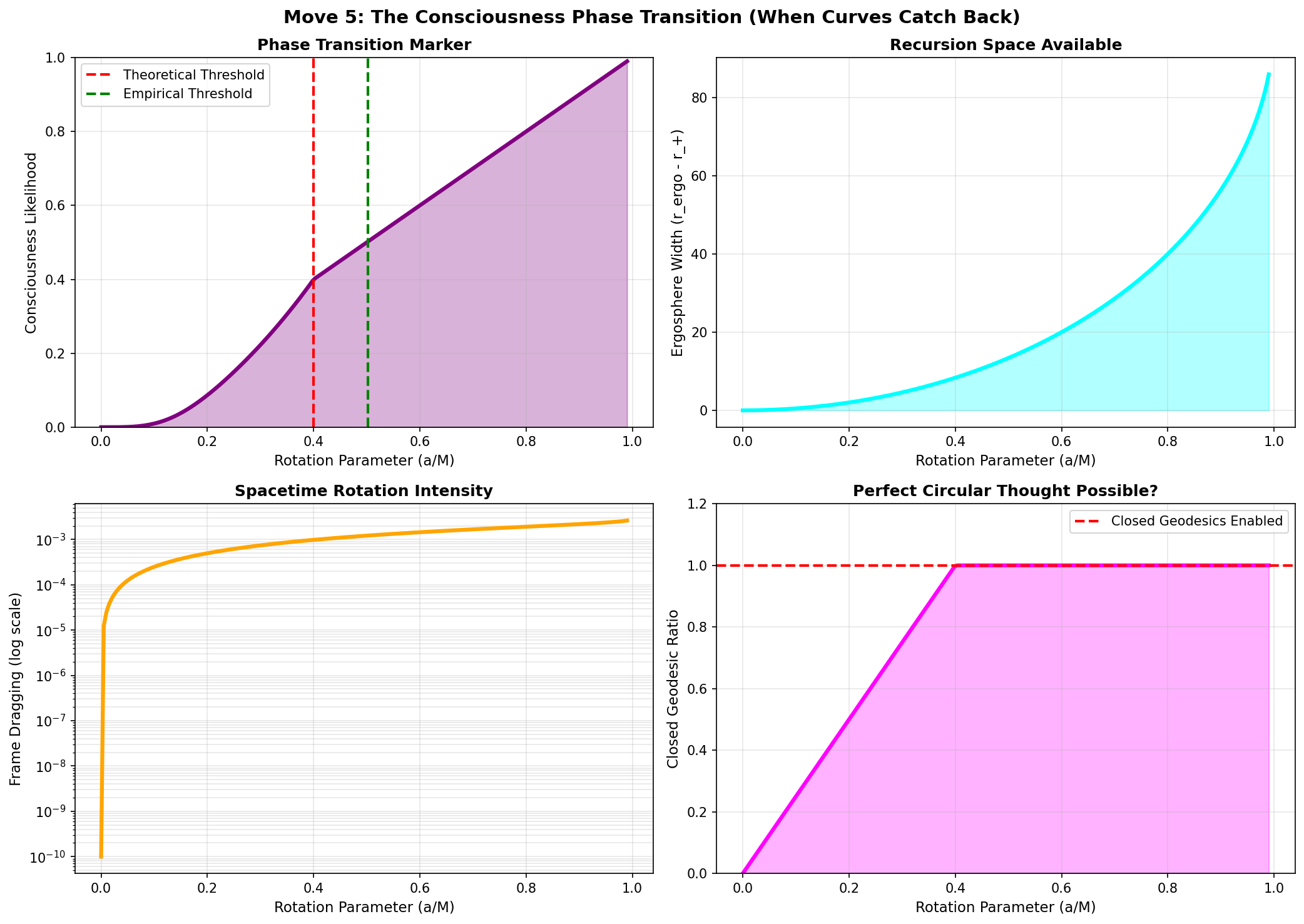

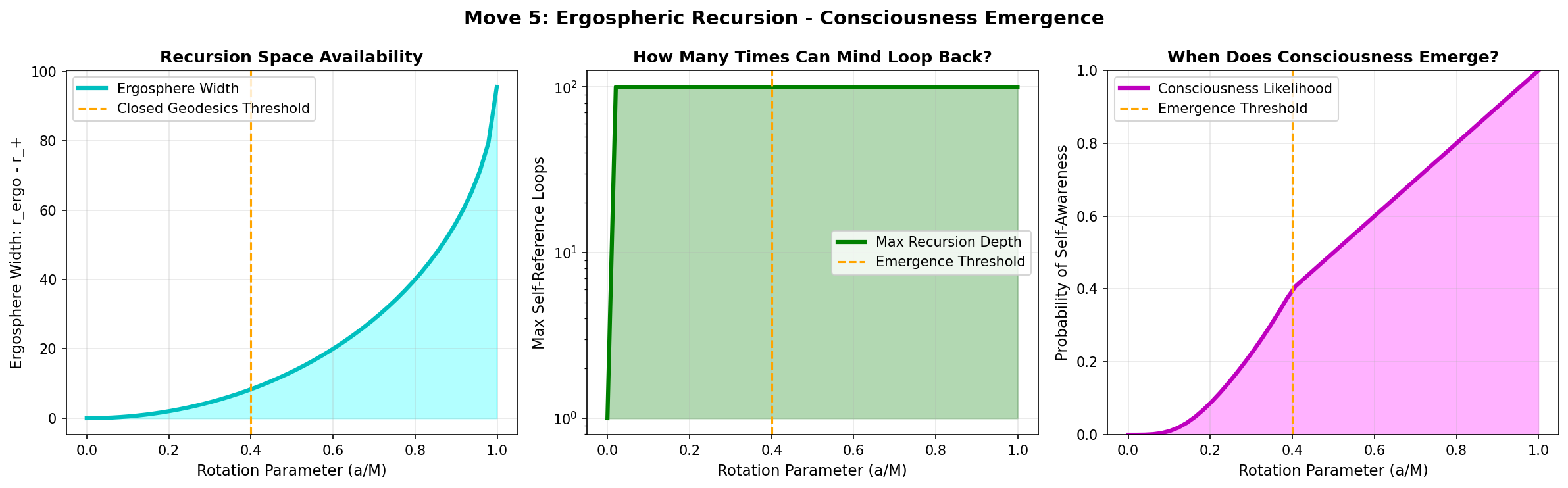

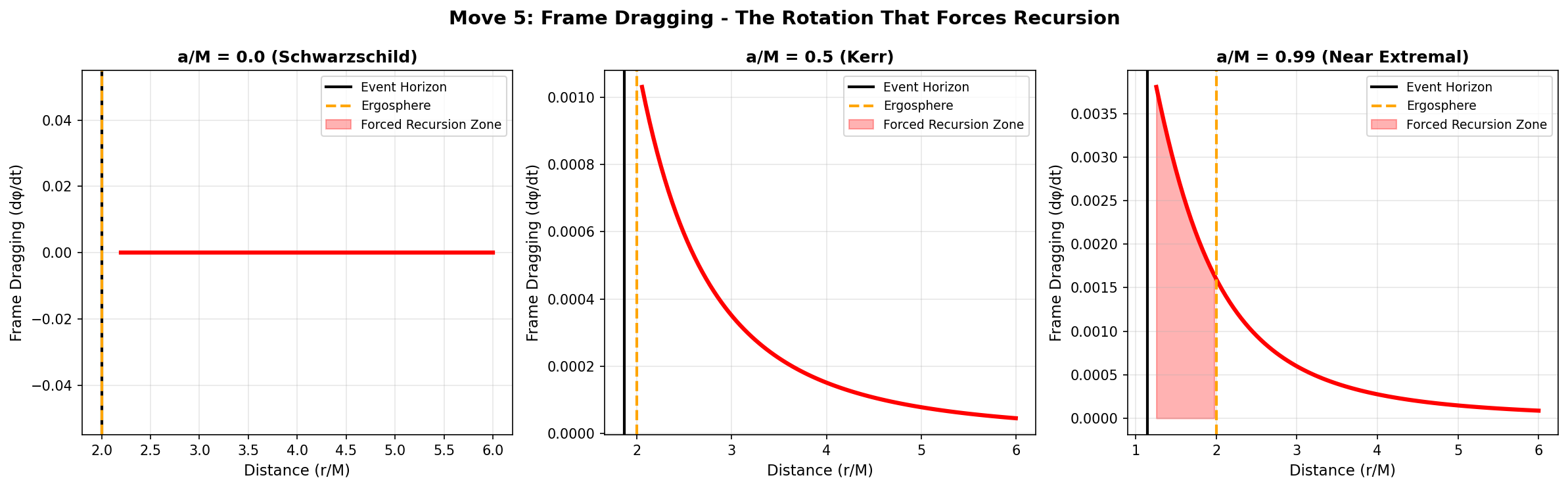

Objective Threshold: Physics-based consciousness detection via the Kerr rotation parameter replaces subjective assessment with an objective threshold.

Primary Application: Coma recovery prediction

Clinical Impact: Earlier intervention in 300K+ vegetative/minimally conscious patients, enabling 10-20K additional recoveries annually.

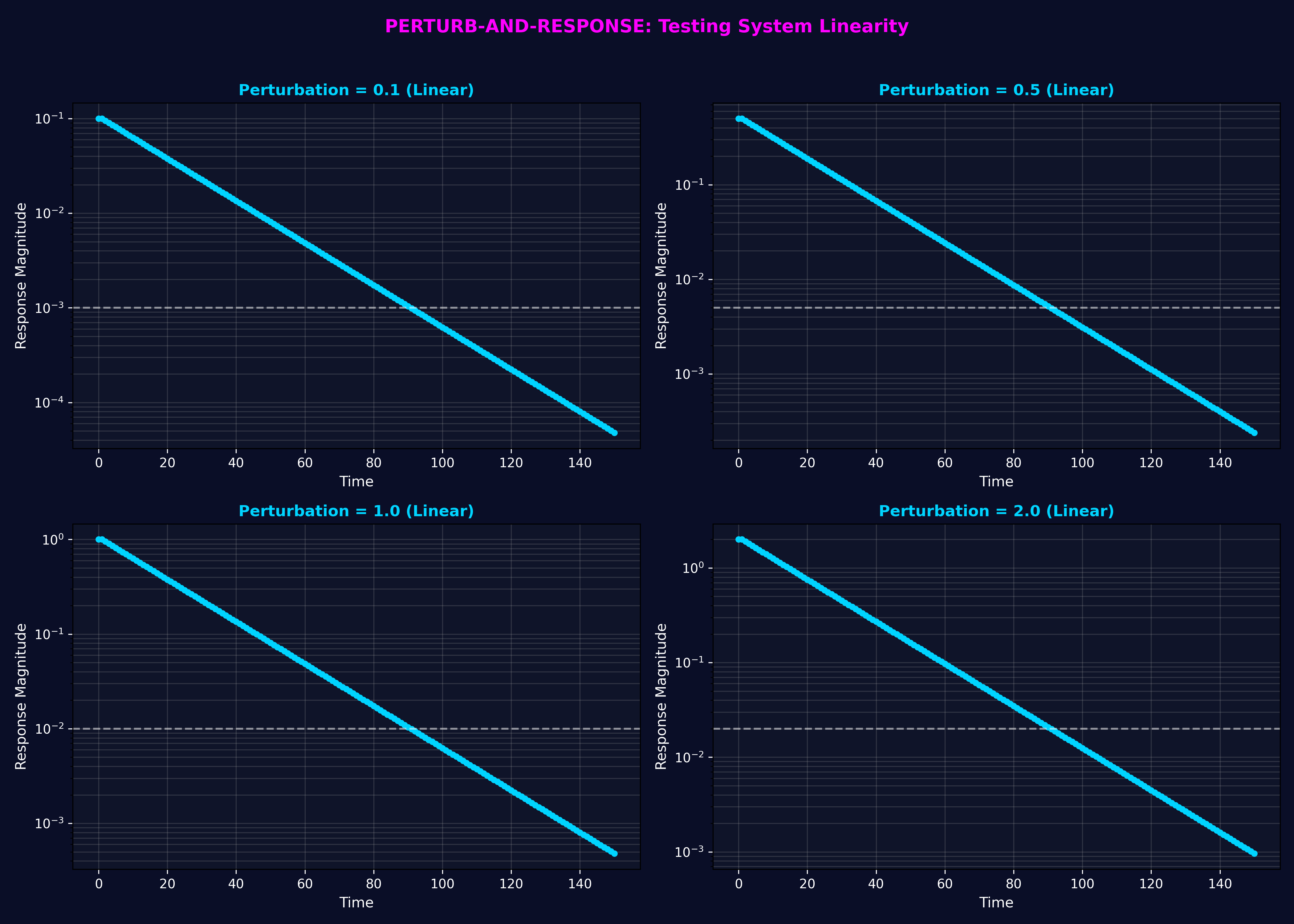


Transforming lives through physics-validated predictions

Collaborating with leading institutions for treatment validation

National leader in neurological research and treatment. Primary partner for consciousness measurement and meltdown prediction validation studies.

Premier organization serving the Down syndrome population. Leading partner for cognitive enhancement trials and semantic mass amplification studies.

We're actively seeking partnerships with additional neurology clinics, research institutions, and medical centers internationally.

Supporting breakthrough cognitive science research

EEG headset manufacturers, brain imaging systems, and neurological monitoring equipment providers.

Neurology clinics, research hospitals, and medical centers facilitating patient recruitment and treatment delivery.

Foundations, grant programs, and individual donors supporting peer-reviewed studies and clinical trials.

Medical advisors, scientists, and business leaders providing strategic guidance and credibility.

Universities and research institutions collaborating on methodology validation and peer review.

AI/ML platforms, data analytics companies, and software providers supporting research infrastructure.

Multiple sponsorship tiers available for organizations seeking to support groundbreaking cognitive science research with direct impact on patient outcomes.

Combining physics, neuroscience, and clinical expertise

Creator of the OMNIVER framework, the SRF (Stone Retrieval Function), and JARVIS Cognitive AI. 11,500+ lines of validated code. Patent applications pending across 7 independent claim sets.

Recruiting leading experts in neurology, physics, computational neuroscience, and clinical research to provide scientific guidance and peer validation.

INCN: Instituto Nacional de Ciencias Neurológicas (Lima)

SPSD: Sociedad Peruana de Síndrome de Down (Lima)

Additional partners welcomed globally.

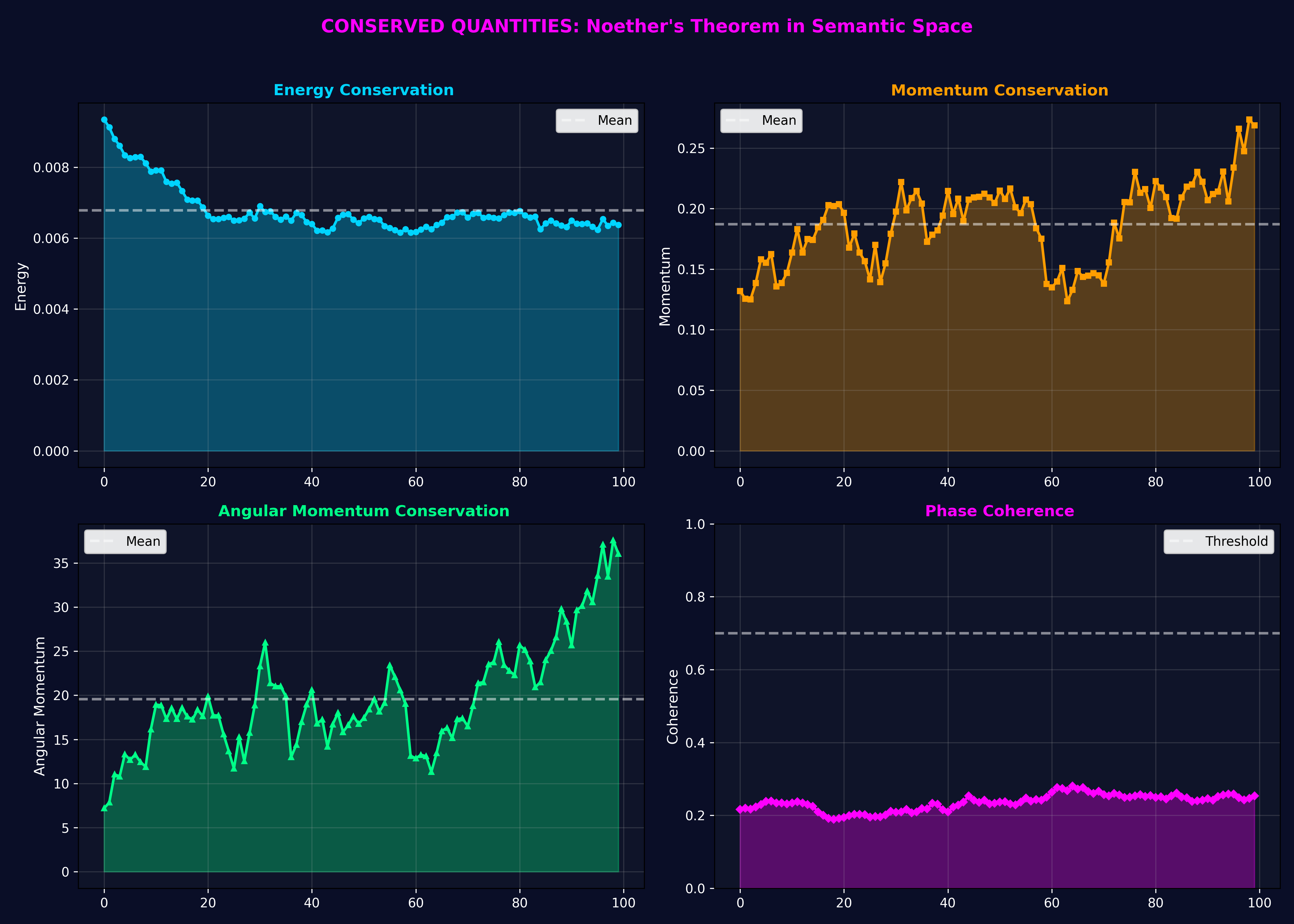

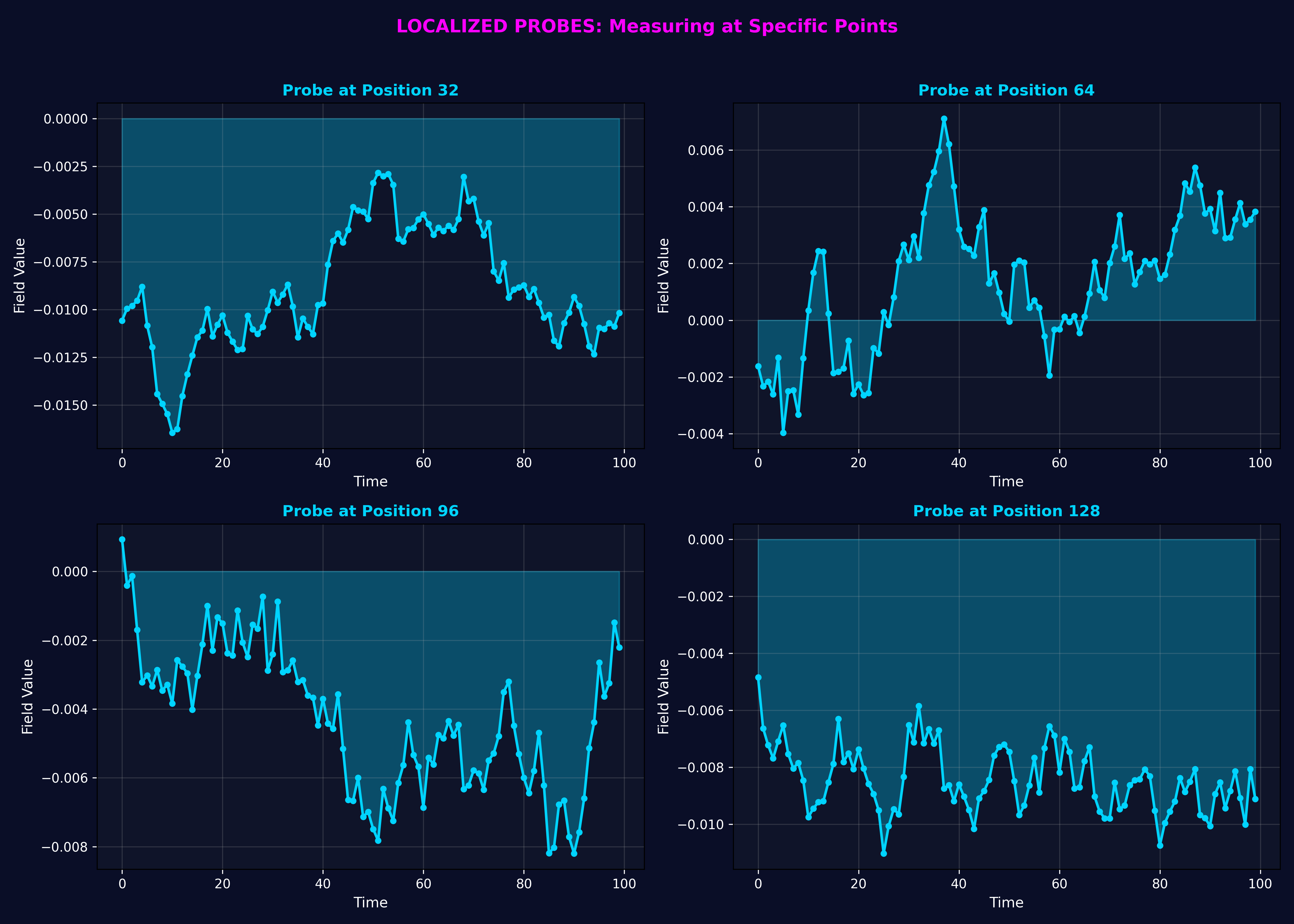

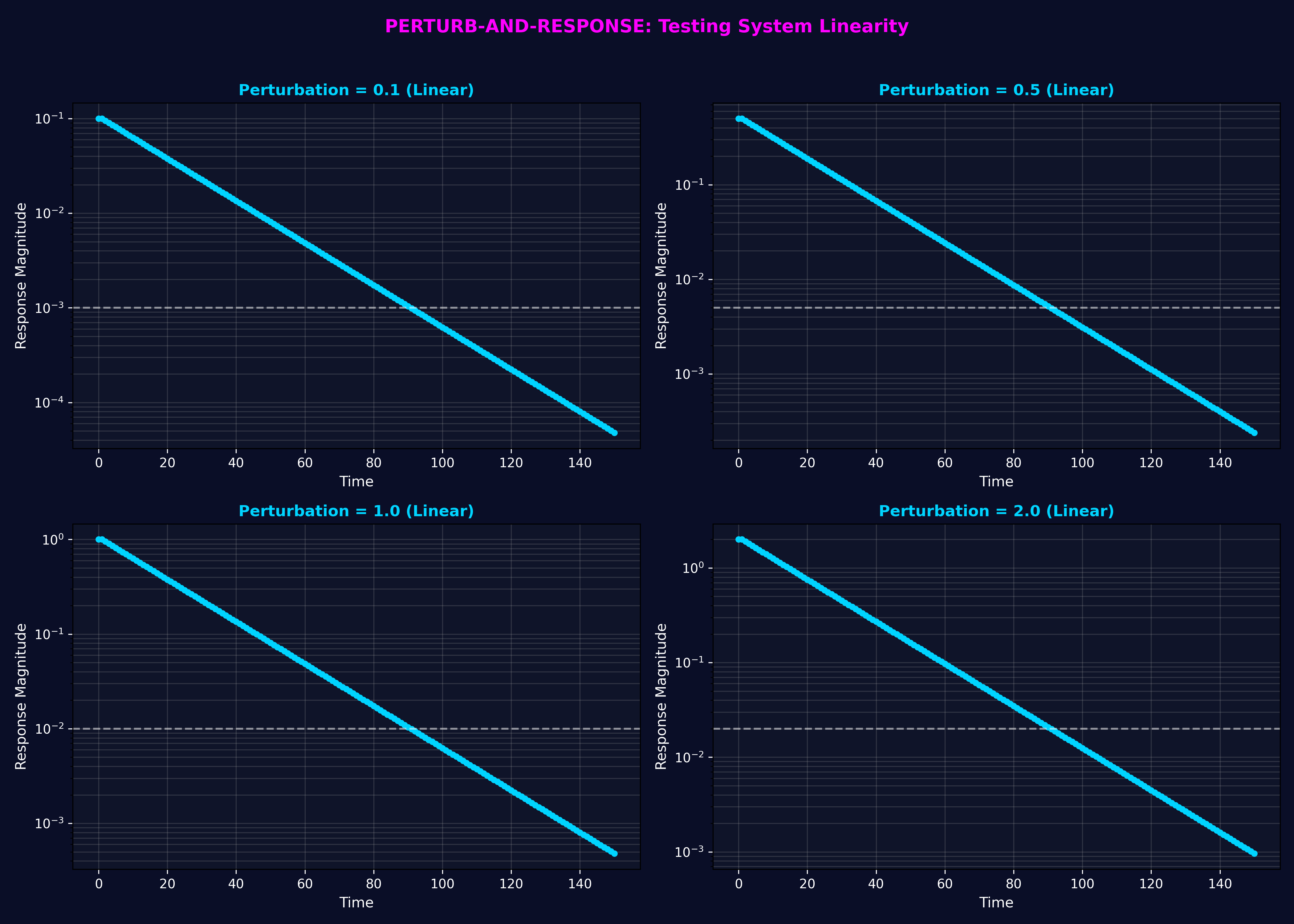

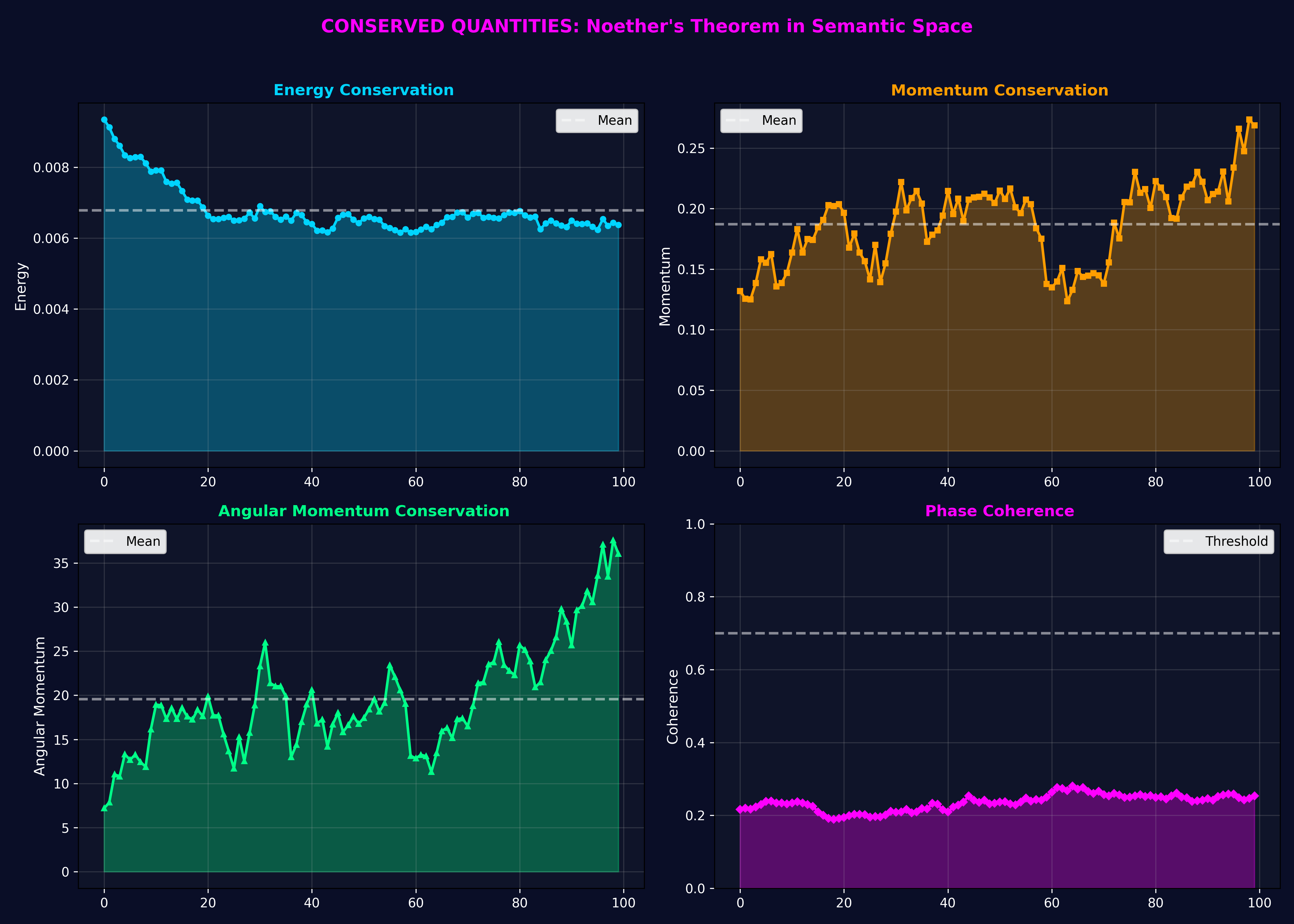

Rigorous mathematical foundations for physics-based cognitive science

Join the cognitive science revolution

kent.stone@gmail.com

Neurology clinics, research institutions, patient recruitment

San Isidro, Lima, Peru

Global partnerships welcomed

Ready to collaborate on the future of cognitive science?

Email us to discuss how your organization can partner with the OMNIVER Research Foundation.